Improve your health

December 14, 2025

Multimodal Signals in Stress Monitoring

Stress is more than just a feeling - it’s a physical response that impacts your heart, skin, temperature, and even brain activity. Traditional methods like self-reports and doctor visits often miss these rapid shifts. That’s where multimodal monitoring comes in, combining data from multiple sensors to provide a clearer, real-time view of stress.

Here’s what you need to know:

What It Tracks: Signals like heart rate, EDA (electrodermal activity), skin temperature, accelerometer (movement), EEG (brain activity), and ECG (heart patterns).

Why It Works: Multimodal systems outperform single-signal methods, with detection accuracy reaching up to 98.6%.

How It’s Done: Wearables use deep learning models like MMFD-SD to analyze combined data, even when some signals are incomplete or noisy.

Real-Life Applications: From smartwatches to AI health apps like Healify, these tools deliver stress alerts and personalized advice - think breathing exercises or lifestyle adjustments.

Multimodal monitoring isn’t perfect yet. Challenges like individual differences, false positives, and limited datasets remain, but advancements in AI and wearable tech are making stress management more accessible and actionable.

Multimodal Signals and Feature Extraction Methods

Main Physiological Signals: EDA, HR, Accelerometer, Skin Temperature, EEG, ECG

The human body reacts to stress by producing specific physiological signals, and wearable sensors have made it possible to monitor these responses in real time. Electrodermal activity (EDA) reflects sympathetic arousal through changes in sweat gland activity, while heart rate (HR) and ECG (electrocardiography) capture shifts in heart rate variability (HRV), which signal transitions between sympathetic and parasympathetic nervous system activity [1][2].

An accelerometer tracks movement along three axes (X, Y, and Z), helping to differentiate stress-related movements from regular physical activity. Skin temperature tends to decrease during acute stress due to peripheral vasoconstriction [1][3]. Additionally, EEG (electroencephalography) captures brain activity, revealing changes in cognitive load and emotional states [1][2].

These physiological signals serve as the building blocks for extracting meaningful features, which are essential for developing the deep learning models discussed later.

Methods for Extracting Features

To make these physiological signals useful, raw data must be converted into measurable features. Time-domain techniques compute statistics such as the mean and standard deviation, and for heart rate analysis the HRV indices SDNN (standard deviation of NN intervals) and RMSSD (root mean square of successive differences) are commonly used. Frequency-domain methods, often employing the Fast Fourier Transform (FFT), measure spectral power within specific frequency bands. In EDA data, researchers typically evaluate the number and amplitude of skin conductance responses over a set time frame, usually 30 to 60 seconds [1][3].
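As a minimal sketch of the time-domain HRV indices named above, the snippet below computes SDNN and RMSSD from a short list of NN intervals. The interval values are made up for illustration, and the use of the sample (n - 1) standard deviation for SDNN is an assumption, since the text does not specify it.

```python
import math
import statistics


def sdnn(nn_ms):
    """Standard deviation of NN intervals in ms (sample standard deviation)."""
    return statistics.stdev(nn_ms)


def rmssd(nn_ms):
    """Root mean square of successive differences between adjacent NN intervals, in ms."""
    diffs = [b - a for a, b in zip(nn_ms, nn_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical NN intervals in milliseconds (illustrative, not from the cited studies)
nn_intervals = [800, 810, 790, 805, 795]

print(f"SDNN  = {sdnn(nn_intervals):.2f} ms")
print(f"RMSSD = {rmssd(nn_intervals):.2f} ms")
```

In practice these indices would be computed over a sliding window of beats rather than five values, but the arithmetic is the same.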

When analyzing HRV, frequency-domain methods assess power in low- and high-frequency bands. Stress is frequently linked to reduced high-frequency power and an elevated LF/HF ratio, reflecting increased sympathetic nervous system activity [1][2]. Advanced frameworks like MMFD-SD utilize parallel convolutional neural network (CNN) branches to separately process time- and frequency-domain features, allowing for a more comprehensive analysis of each signal's distinct characteristics [1][5].
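The LF/HF ratio described above can be sketched with NumPy's FFT. This example assumes the RR-interval series has already been resampled onto an even time grid, and it uses the conventional band edges of 0.04 to 0.15 Hz (LF) and 0.15 to 0.40 Hz (HF). Those band edges, the 4 Hz sampling rate, and the synthetic signal are assumptions for illustration and do not come from the cited frameworks.

```python
import numpy as np

LF_BAND = (0.04, 0.15)  # Hz, conventional low-frequency HRV band (assumed here)
HF_BAND = (0.15, 0.40)  # Hz, conventional high-frequency HRV band (assumed here)


def band_power(freqs, power, band):
    """Sum periodogram power over the half-open band [low, high)."""
    low, high = band
    mask = (freqs >= low) & (freqs < high)
    return float(power[mask].sum())


def lf_hf_ratio(rr_ms, fs_hz):
    """LF/HF power ratio of an evenly resampled RR-interval series."""
    x = np.asarray(rr_ms, dtype=float)
    x = x - x.mean()  # remove the DC component
    power = np.abs(np.fft.rfft(x)) ** 2 / len(x)  # periodogram; scaling cancels in the ratio
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs_hz)
    return band_power(freqs, power, LF_BAND) / band_power(freqs, power, HF_BAND)


# Synthetic tachogram: a strong 0.10 Hz (LF) and a weaker 0.25 Hz (HF) oscillation
fs = 4.0
t = np.arange(0, 300, 1.0 / fs)  # 5 minutes of samples
rr = 800 + 40 * np.sin(2 * np.pi * 0.10 * t) + 20 * np.sin(2 * np.pi * 0.25 * t)

print(f"LF/HF = {lf_hf_ratio(rr, fs):.2f}")
```

Because the LF component has twice the amplitude of the HF component, the ratio comes out near 4. A drop in the HF amplitude would push it higher, which is the pattern the text associates with stress.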

Stress Detection from wearable Sensor data using Deep Learning Methodologies - SEBASTIAN DABERDAKU

Deep Learning Models for Multimodal Stress Detection

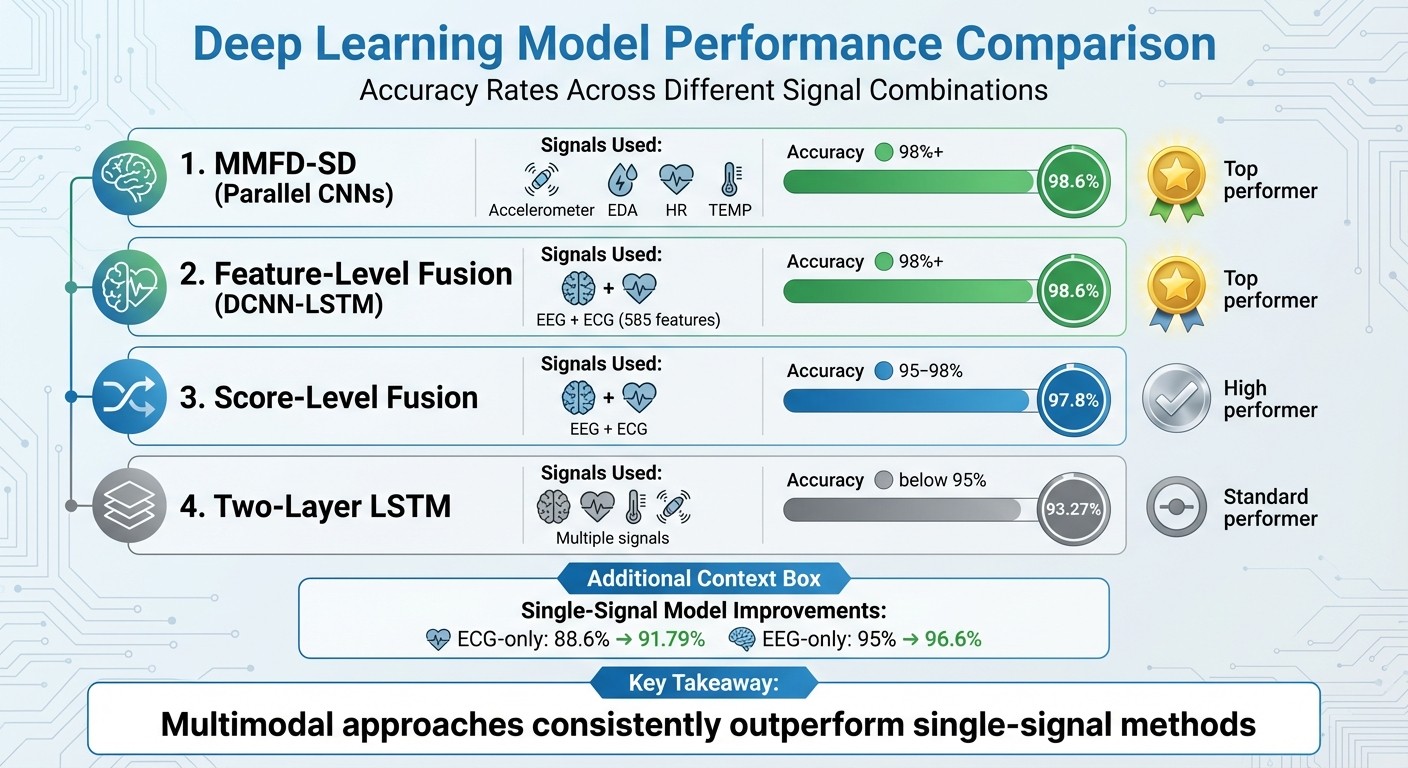

Deep Learning Model Performance Comparison for Multimodal Stress Detection

The MMFD-SD Framework

The MMFD-SD (Multimodal Deep Learning-based Stress Detection) framework represents a leap forward in how wearable technology can identify stress in everyday environments. Originally developed for high-stress occupations like nursing, this model analyzes four key physiological signals: accelerometer data (capturing motion on X, Y, and Z axes), electrodermal activity (EDA), heart rate (HR), and skin temperature (TEMP) [1].

What sets MMFD-SD apart is its use of parallel CNN branches to process both time-domain patterns and frequency features derived via FFT. Each branch focuses on preserving the unique characteristics of its respective signal, and the outputs are then combined to create a unified representation [1]. This design makes the framework especially effective for real-world applications, where data collection can be inconsistent, as is often the case with wearable devices [1].
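To make the parallel-branch idea concrete, here is a toy NumPy sketch: one branch convolves over the time-domain window, the other over its FFT magnitude spectrum, and the pooled outputs are concatenated. This is not the MMFD-SD implementation. The kernel sizes, the untrained random kernels, the 64-sample window, and the function names are all hypothetical, chosen only to show the data flow.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv_branch(x, kernels):
    """One tiny 'CNN branch': 1D convolutions, ReLU, then global average pooling."""
    feature_maps = [np.convolve(x, k, mode="valid") for k in kernels]
    return np.array([np.maximum(fm, 0.0).mean() for fm in feature_maps])


def fused_representation(window, time_kernels, freq_kernels):
    """Run a time-domain and a frequency-domain branch, then concatenate their outputs."""
    time_input = (window - window.mean()) / (window.std() + 1e-8)  # z-score the window
    freq_input = np.abs(np.fft.rfft(time_input))  # FFT magnitude spectrum
    time_features = conv_branch(time_input, time_kernels)
    freq_features = conv_branch(freq_input, freq_kernels)
    return np.concatenate([time_features, freq_features])


# Hypothetical setup: one 64-sample signal window and 4 untrained kernels per branch
window = rng.normal(size=64)
time_kernels = rng.normal(size=(4, 5))
freq_kernels = rng.normal(size=(4, 5))

representation = fused_representation(window, time_kernels, freq_kernels)
print(representation.shape)  # (8,)
```

A real model would learn the kernels, stack several layers per branch, repeat this for each signal, and feed the concatenated vector to a classifier.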

Performance Metrics and Model Comparisons

MMFD-SD achieved 98.6% accuracy in stress detection while relying only on signals readily available from consumer-grade wearables [1]. That surpasses a two-layer LSTM model, which reached 93.27%, and matches the hybrid DCNN-LSTM model that uses EEG and ECG data [2].

Other models have also demonstrated strong performance when optimized for specific signal types. For instance, ECG-only models improved their accuracy from 88.6% to 91.79%, while EEG-only models improved from 95% to 96.6% [2]. Even after optimization, these single-signal results stay below the 97.8% to 98.6% reported for fused EEG and ECG data, which highlights the advantage of combining multiple signals. However, the choice of signals and the techniques used to integrate them play a critical role in determining overall performance.

| Model Type | Signals Used | Accuracy |
|---|---|---|
| MMFD-SD (parallel CNNs) | Accelerometer, EDA, HR, TEMP | 98.6% [1] |
| Feature-level fusion (DCNN-LSTM) | EEG + ECG (585 features) | 98.6% [2] |
| Score-level fusion | EEG + ECG | 97.8% [2] |
| Two-layer LSTM | Multiple signals | 93.27% [2] |

These results underscore the importance of exploring fusion techniques for integrating multimodal signals to enhance stress detection systems.

Fusion Techniques for Combining Multimodal Signals

Feature-Level Fusion vs. Score-Level Fusion

Deep learning frameworks have paved the way for advanced fusion techniques to enhance multimodal stress detection. These techniques focus on integrating data from various physiological signals, each with its own strengths.

Feature-level fusion combines the features extracted from multiple signals into a single vector early in the analysis process, before classification. This allows the model to uncover complex relationships between the signals. For instance, one study combined 513 EEG features with 72 ECG features (585 in total), achieving 98.6% accuracy in stress detection [2].
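In its simplest form, feature-level fusion is a concatenation of per-modality feature vectors. The sketch below uses the feature counts quoted above. The feature values are random placeholders and the function name is hypothetical, and the cited study may normalize or select features before combining them.

```python
import random

N_EEG_FEATURES = 513  # count reported in the text
N_ECG_FEATURES = 72   # count reported in the text


def fuse_features(eeg_features, ecg_features):
    """Feature-level (early) fusion: concatenate per-modality feature vectors."""
    return list(eeg_features) + list(ecg_features)


random.seed(0)
# Placeholder values; real features would come from the extraction step
eeg = [random.random() for _ in range(N_EEG_FEATURES)]
ecg = [random.random() for _ in range(N_ECG_FEATURES)]

fused = fuse_features(eeg, ecg)
print(len(fused))  # 585
```

The fused vector is what a single downstream classifier would see, which is why it can learn relationships that span both signals.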

Score-level fusion, by contrast, processes each signal independently through its own model to generate stress probability scores. These scores are then combined - often using weighted averaging - to produce the final result. While slightly less accurate than feature-level fusion (97.8% accuracy with EEG and ECG data), score-level fusion still surpasses older methods like LSTM-based models, which achieved 93.27% accuracy [2].
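The weighted-averaging step can be sketched in a few lines. The per-modality scores, the weights, and the 0.5 decision threshold below are hypothetical values chosen for illustration, not figures from the cited study.

```python
def fuse_scores(scores, weights):
    """Score-level (late) fusion: weighted average of per-modality stress probabilities."""
    if scores.keys() != weights.keys():
        raise ValueError("scores and weights must cover the same modalities")
    total_weight = sum(weights.values())
    return sum(scores[m] * weights[m] for m in scores) / total_weight


# Hypothetical outputs of two independent models, and hypothetical weights
scores = {"eeg": 0.9, "ecg": 0.6}
weights = {"eeg": 0.75, "ecg": 0.25}

p_stress = fuse_scores(scores, weights)
label = "stress" if p_stress >= 0.5 else "no stress"  # assumed decision threshold
print(f"{p_stress:.3f} -> {label}")
```

Dividing by the total weight keeps the result a valid probability even when the weights do not sum to 1.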

The key difference lies in the timing of data integration: feature-level fusion integrates signals early, capturing hidden correlations, while score-level fusion keeps the signals separate until the final decision stage. Both methods play a critical role in advancing real-time stress detection, particularly for wearable technology.

Benefits and Drawbacks of Fusion Methods

Feature-level fusion stands out for its ability to capture complementary details across different signals. For example, combining time-domain statistics (like the mean and standard deviation from heart rate and EDA) with frequency-domain features (such as spectral power from accelerometer data) enables the model to detect intricate stress patterns that single signals might miss [1][2].

However, this method has its challenges. It requires significant computational resources, which can be a hurdle for real-time processing on wearable devices. Managing large feature sets - like the 585 combined EEG and ECG features - can strain resource-limited devices and complicate real-time monitoring [2]. High-dimensional data can also pose performance issues if not managed with effective feature selection techniques.

Score-level fusion, by contrast, processes signals in parallel, making it more efficient and better suited for devices with limited resources [1][2]. This approach is ideal for consumer wearables that need to deliver quick stress alerts while conserving battery life. However, its simplicity comes at a cost - it may overlook the subtle inter-signal interactions that feature-level fusion can capture.

Both methods have their strengths and trade-offs, with the choice often depending on the specific application and hardware constraints.

Applications in Wearables and Future Directions

The advancements in fusion techniques have paved the way for practical applications of multimodal stress monitoring, especially in wearable technology.

Practical Uses in Wearable Devices

Modern wearable devices leverage data from multiple sensors, including heart rate variability, electrodermal activity, accelerometers, and skin temperature, to monitor stress levels continuously. These devices operate seamlessly in the background, presenting stress data in simple categories like "calm", "medium stress", or "high stress." Users can also access daily or weekly summaries on a connected device. Research in areas such as healthcare and transportation has shown that combining data from multiple sensors with deep learning models is far more effective at detecting stress in real time than relying solely on heart rate analysis [1][3][4].

When stress levels cross a certain threshold, wearables can send notifications and suggest quick interventions. These might include guided breathing exercises lasting a few minutes, reminders to take a short walk, or prompts for microbreaks. Some devices also provide time-of-day heatmaps, helping users spot patterns - like stress spikes linked to specific activities, poor sleep, or caffeine consumption [4].
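The categories and threshold-based notifications described above could look roughly like this. The threshold values, the requirement of three consecutive high readings, and the function names are assumptions for illustration, since actual devices do not publish their rules here.

```python
def stress_category(score, medium_threshold=0.4, high_threshold=0.7):
    """Map a stress score in [0, 1] to one of three display categories."""
    if score >= high_threshold:
        return "high stress"
    if score >= medium_threshold:
        return "medium stress"
    return "calm"


def should_notify(recent_scores, high_threshold=0.7, min_consecutive=3):
    """Notify only after several consecutive high readings, not on a single spike."""
    if len(recent_scores) < min_consecutive:
        return False
    return all(s >= high_threshold for s in recent_scores[-min_consecutive:])


# Hypothetical sequence of model outputs, oldest first
readings = [0.35, 0.72, 0.75, 0.81]
print(stress_category(readings[-1]))
print(should_notify(readings))
```

Requiring a short run of high readings is one simple way to keep a momentary spike from triggering a breathing-exercise prompt.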

While wearables excel at delivering immediate stress alerts, AI-driven health coaching apps take this a step further by offering personalized insights and guidance.

AI Health Coaching Apps Like Healify

Healify enhances what wearables offer by integrating their multimodal data with additional inputs like bloodwork results, lifestyle habits, and mood logs. Using deep learning techniques similar to those employed in multimodal stress analysis, Healify identifies patterns across diverse data streams. For instance, it can link stress trends to specific workdays, glucose fluctuations, or even sleep deprivation that often precedes heightened stress.

What sets Healify apart is its ability to provide tailored recommendations instead of generic advice. It considers factors such as individual schedules, time zones, and workplace norms to offer actionable insights. For example, the app might suggest a personalized evening routine to help unwind or adjust caffeine intake based on stress levels. Healify also delivers timely nudges, like recommending a quick breathing exercise before a stressful meeting, turning complex data into practical, user-friendly guidance.

Despite these advancements, there are still hurdles to overcome and areas ripe for further research.

Current Challenges and Research Opportunities

The journey toward perfecting multimodal stress monitoring is not without its challenges. Individual differences and the variety of wearable devices make personalization a complex task [4]. Additionally, external factors like physical activity, extreme temperatures, or illness can mimic stress signals, leading to potential false positives. To address this, models need to incorporate contextual data, such as physical activity levels, environmental conditions, and occasional user input, to improve accuracy [3][4].
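One simple way to use contextual data is to suppress a stress flag while the accelerometer shows the wearer is moving. The sketch below assumes accelerometer samples in units of g and uses hypothetical thresholds. It is a rule-based illustration of the idea, not a method taken from the cited work.

```python
import math


def movement_intensity(samples_g):
    """Mean absolute deviation of acceleration magnitude from 1 g (gravity), in g."""
    deviations = [abs(math.sqrt(x * x + y * y + z * z) - 1.0) for x, y, z in samples_g]
    return sum(deviations) / len(deviations)


def contextual_stress_flag(stress_score, samples_g,
                           stress_threshold=0.7, activity_threshold=0.15):
    """Flag stress only when the wearer is not physically active."""
    if movement_intensity(samples_g) >= activity_threshold:
        return False  # elevated arousal is more plausibly due to movement
    return stress_score >= stress_threshold


# Hypothetical (x, y, z) accelerometer samples in g
resting = [(0.0, 0.0, 1.0), (0.01, 0.0, 1.0), (0.0, 0.02, 0.99)]
walking = [(0.3, 0.1, 1.2), (-0.4, 0.2, 0.7), (0.5, -0.3, 1.3)]

print(contextual_stress_flag(0.9, resting))
print(contextual_stress_flag(0.9, walking))
```

The same high stress score is flagged at rest but ignored while walking. A learned model would instead take the activity level as an input feature rather than applying a hard gate.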

Another issue lies in the datasets used for training these models. Many are based on small, homogeneous groups or controlled laboratory settings, which may not reflect the diverse real-world conditions across the U.S. [1][2][3][4]. Researchers are exploring advanced techniques, such as domain adaptation, transfer learning, and on-device continuous learning, to make global models more adaptable to individual users while maintaining data privacy [1][2][4]. Future advancements may involve integrating smartphone data - like location, app usage, and calendar activities - to differentiate between stress caused by work, social interactions, or financial pressures. Additionally, developing richer datasets collected over longer periods and tied to everyday experiences will be key to improving the reliability of these systems [3][4].

Conclusion

The advancements discussed highlight how multimodal approaches are reshaping stress monitoring. By combining signals like heart rate, EDA, skin temperature, accelerometer data, and EEG/ECG, the best multimodal systems detect stress more accurately than single-sensor methods. In the studies cited here, the leading fusion models reach 97.8% to 98.6% accuracy, above the high-80s to mid-90s range reported for single-signal approaches. Higher accuracy can in turn mean fewer false alarms, making these systems more reliable for users navigating the unpredictable conditions of daily life with smartwatches or fitness trackers.

Deep learning frameworks, such as MMFD-SD, further showcase the practicality of multimodal systems by handling intermittently collected data. This makes them ideal for everyday wearables, enabling devices to alert users to rising stress levels even before they consciously recognize it. By blending advanced analytics with usability, these systems provide actionable insights that can be seamlessly integrated into daily routines.

On top of the technical advancements, AI-driven health coaching turns this data into meaningful, real-time support. Tools like Healify go beyond presenting raw numbers by offering personalized interventions. For example, wearable data is combined with broader health metrics to deliver tailored recommendations - like a quick breathing exercise before a meeting or a customized evening wind-down routine. These features help users shift from simply tracking stress to actively managing it, fostering better habits and improved sleep.

However, challenges remain in moving from prototypes to fully integrated, real-world solutions. Current datasets are often small and lack diversity, limiting the system’s ability to generalize. Future efforts must focus on creating large, diverse datasets, developing context-aware models that can distinguish stress from physical activity, and ensuring on-device processing is efficient, preserving battery life and user privacy. The path forward is clear: multimodal, AI-enhanced wearables are transforming stress and mental health care into continuous, real-world support. These tools meet people wherever they are - whether at work, at home, or on the go - helping them build healthier and more resilient lifestyles.

FAQs

How do multimodal signals enhance the accuracy of stress monitoring?

Multimodal signals improve stress monitoring by blending data from different physiological markers like heart rate variability, skin conductance, and skin temperature. This combination creates a fuller picture of the body's stress response, leading to more accurate detection.

Analyzing these signals together helps distinguish true stress reactions from false alarms, providing clearer insight into both physical and mental health.

What are the main challenges of using multimodal signals for stress monitoring?

Multimodal stress monitoring systems come with their fair share of challenges. One major hurdle is combining data from various sensors while maintaining accuracy and reliability. This becomes even trickier when you factor in individual differences - people’s physiology and stress responses can vary significantly, making it tough to design systems that work effectively for everyone.

Another challenge lies in real-time data processing. These systems need to handle and analyze massive amounts of information quickly to deliver actionable insights without delay. On top of that, protecting user privacy and ensuring strong data security are essential, given the sensitive nature of the health information these systems often manage.

How does Healify use multimodal data to provide personalized health advice?

Healify taps into multimodal data - like physiological signals, activity levels, sleep habits, and stress markers - to provide health advice that's tailored to each individual. By analyzing real-time information from wearables and other sources, it detects patterns such as stress or fatigue and suggests practical ways to improve areas like sleep, nutrition, hydration, and overall wellness.

This approach transforms complex health data into actionable steps, helping users enhance both their physical and mental well-being.

Related Blog Posts

Stress is more than just a feeling - it’s a physical response that impacts your heart, skin, temperature, and even brain activity. Traditional methods like self-reports and doctor visits often miss these rapid shifts. That’s where multimodal monitoring comes in, combining data from multiple sensors to provide a clearer, real-time view of stress.

Here’s what you need to know:

What It Tracks: Signals like heart rate, EDA (electrodermal activity), skin temperature, accelerometer (movement), EEG (brain activity), and ECG (heart patterns).

Why It Works: Multimodal systems outperform single-signal methods, with detection accuracy reaching up to 98.6%.

How It’s Done: Wearables use deep learning models like MMFD-SD to analyze combined data, even when some signals are incomplete or noisy.

Real-Life Applications: From smartwatches to AI health apps like Healify, these tools deliver stress alerts and personalized advice - think breathing exercises or lifestyle adjustments.

Multimodal monitoring isn’t perfect yet. Challenges like individual differences, false positives, and limited datasets remain, but advancements in AI and wearable tech are making stress management more accessible and actionable.

Multimodal Signals and Feature Extraction Methods

Main Physiological Signals: EDA, HR, Accelerometer, Skin Temperature, EEG, ECG

The human body reacts to stress by producing specific physiological signals, and wearable sensors have made it possible to monitor these responses in real time. Electrodermal activity (EDA), heart rate (HR), and ECG (electrocardiography) are key indicators that reflect shifts in heart rate variability (HRV), signaling transitions between sympathetic and parasympathetic nervous system activity [1][2].

An accelerometer tracks movement along three axes (X, Y, and Z), helping to differentiate stress-related movements from regular physical activity. Skin temperature tends to decrease during acute stress due to peripheral vasoconstriction [1][3]. Additionally, EEG (electroencephalography) captures brain activity, revealing changes in cognitive load and emotional states [1][2].

These physiological signals serve as the building blocks for extracting meaningful features, which are essential for developing the deep learning models discussed later.

Methods for Extracting Features

To make these physiological signals useful, raw data must be converted into measurable features. Time-domain techniques analyze metrics like the mean, standard deviation, and HRV indices such as SDNN (standard deviation of NN intervals) and RMSSD (root mean square of successive differences). Frequency-domain methods, often employing Fast Fourier Transform (FFT), measure spectral power within specific frequency bands. For heart rate analysis, HRV metrics like SDNN and RMSSD are commonly used. In EDA data, researchers typically evaluate the number and amplitude of skin conductance responses over a set time frame, usually 30 to 60 seconds [1][3].

When analyzing HRV, frequency-domain methods assess power in low- and high-frequency bands. Stress is frequently linked to reduced high-frequency power and an elevated LF/HF ratio, reflecting increased sympathetic nervous system activity [1][2]. Advanced frameworks like MMFD-SD utilize parallel convolutional neural network (CNN) branches to separately process time- and frequency-domain features, allowing for a more comprehensive analysis of each signal's distinct characteristics [1][5].

Stress Detection from wearable Sensor data using Deep Learning Methodologies - SEBASTIAN DABERDAKU

Deep Learning Models for Multimodal Stress Detection

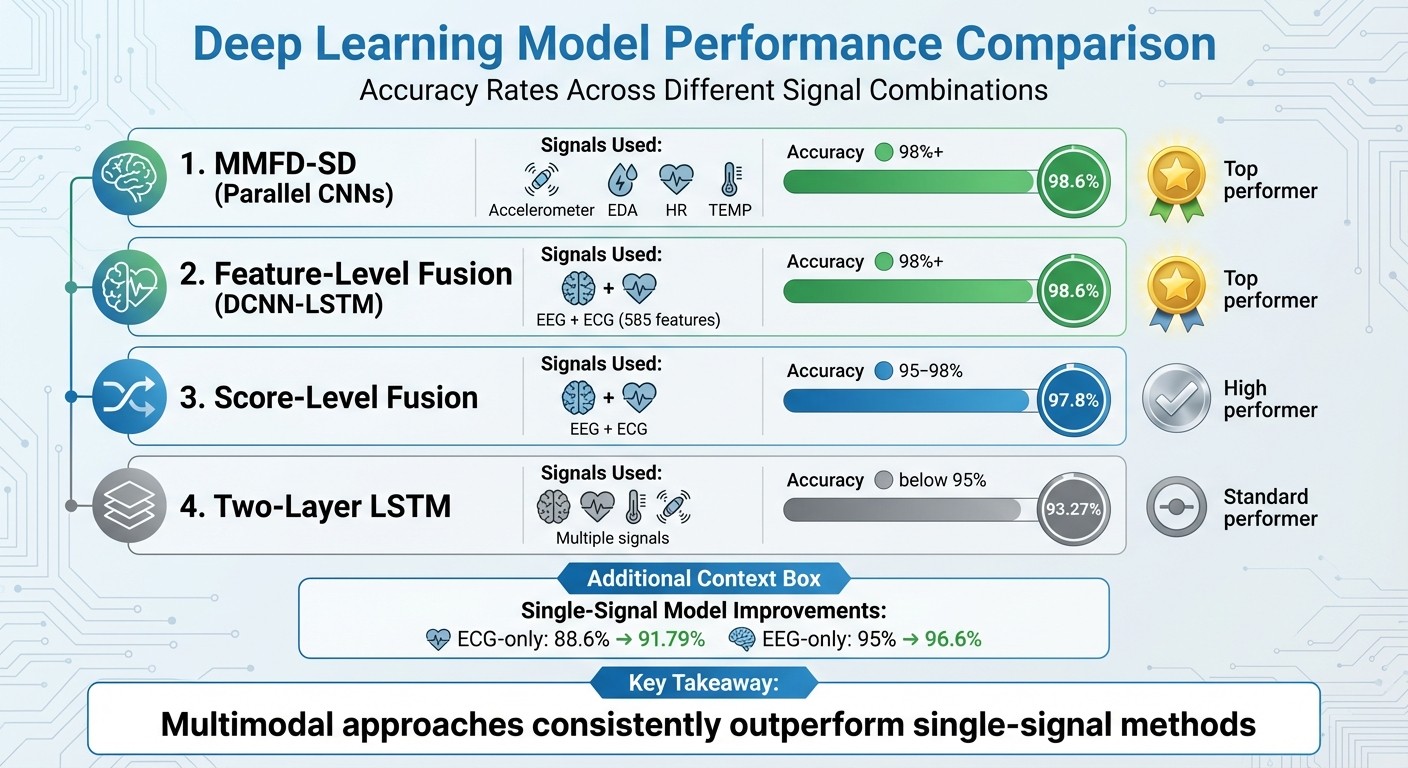

Deep Learning Model Performance Comparison for Multimodal Stress Detection

The MMFD-SD Framework

The MMFD-SD (Multimodal Deep Learning-based Stress Detection) framework represents a leap forward in how wearable technology can identify stress in everyday environments. Originally developed for high-stress occupations like nursing, this model analyzes four key physiological signals: accelerometer data (capturing motion on X, Y, and Z axes), electrodermal activity (EDA), heart rate (HR), and skin temperature (TEMP) [1].

What sets MMFD-SD apart is its use of parallel CNN branches to process both time-domain patterns and frequency features derived via FFT. Each branch focuses on preserving the unique characteristics of its respective signal, and the outputs are then combined to create a unified representation [1]. This design makes the framework especially effective for real-world applications, where data collection can be inconsistent, as is often the case with wearable devices [1].

Performance Metrics and Model Comparisons

MMFD-SD achieved an impressive 98.6% accuracy in stress detection, equaling the performance of other leading models while focusing on signals readily available from consumer-grade wearables [1]. This accuracy surpasses that of a two-layer LSTM model, which reached 93.27%, and matches the hybrid DCNN-LSTM model that uses EEG and ECG data [2].

Other models have also demonstrated strong performance when optimized for specific signal types. For instance, ECG-only models improved their accuracy from 88.6% to 91.79%, while EEG-only models saw a jump from 95% to 96.6% [2]. These results highlight the advantage of combining multiple signals, as multimodal approaches consistently outperform single-signal methods. However, the choice of signals and the techniques used to integrate them play a critical role in determining overall performance.

Model Type | Signals Used | Accuracy |

|---|---|---|

MMFD-SD (parallel CNNs) | Accelerometer, EDA, HR, TEMP | 98.6% [1] |

Feature-level fusion (DCNN-LSTM) | EEG + ECG (585 features) | 98.6% [2] |

Score-level fusion | EEG + ECG | 97.8% [2] |

Two-layer LSTM | Multiple signals | 93.27% [2] |

These results underscore the importance of exploring fusion techniques for integrating multimodal signals to enhance stress detection systems.

Fusion Techniques for Combining Multimodal Signals

Feature-Level Fusion vs. Score-Level Fusion

Deep learning frameworks have paved the way for advanced fusion techniques to enhance multimodal stress detection. These techniques focus on integrating data from various physiological signals, each with its own strengths.

Feature-level fusion combines raw or lightly processed data from multiple signals early in the analysis process. This allows the model to uncover complex relationships between the signals. For instance, one study combined 513 EEG features with 72 ECG features, achieving an impressive 98.6% accuracy in stress detection[2].

On the other hand, score-level fusion takes a different approach. Each signal is processed independently through its own model to generate stress probability scores. These scores are then combined - often using weighted averaging - to produce the final result. While slightly less accurate than feature-level fusion (97.8% accuracy with EEG and ECG data), score-level fusion still surpasses older methods like LSTM-based models, which achieved 93.27% accuracy[2].

The key difference lies in the timing of data integration: feature-level fusion integrates signals early, capturing hidden correlations, while score-level fusion keeps the signals separate until the final decision stage. Both methods play a critical role in advancing real-time stress detection, particularly for wearable technology.

Benefits and Drawbacks of Fusion Methods

Feature-level fusion stands out for its ability to capture complementary details across different signals. For example, combining time-domain statistics (like the mean and standard deviation from heart rate and EDA) with frequency-domain features (such as spectral power from accelerometer data) enables the model to detect intricate stress patterns that single signals might miss[1][2].

However, this method has its challenges. It requires significant computational resources, which can be a hurdle for real-time processing on wearable devices. Managing large feature sets - like the 585 combined EEG and ECG features - can strain resource-limited devices and complicate real-time monitoring[2]. High-dimensional data can also pose performance issues if not managed with effective feature selection techniques.

Score-level fusion, by contrast, processes signals in parallel, making it more efficient and better suited for devices with limited resources[1][2]. This approach is ideal for consumer wearables that need to deliver quick stress alerts while conserving battery life. However, its simplicity comes at a cost - it may overlook the subtle inter-signal interactions that feature-level fusion can capture.

Both methods have their strengths and trade-offs, with the choice often depending on the specific application and hardware constraints.

Applications in Wearables and Future Directions

The advancements in fusion techniques have paved the way for practical applications of multimodal stress monitoring, especially in wearable technology.

Practical Uses in Wearable Devices

Modern wearable devices leverage data from multiple sensors, including heart rate variability, electrodermal activity, accelerometers, and skin temperature, to monitor stress levels continuously. These devices operate seamlessly in the background, presenting stress data in simple categories like "calm", "medium stress", or "high stress." Users can also access daily or weekly summaries on a connected device. Research in areas such as healthcare and transportation has shown that combining data from multiple sensors with deep learning models is far more effective at detecting stress in real time than relying solely on heart rate analysis [1][3][4].

When stress levels cross a certain threshold, wearables can send notifications and suggest quick interventions. These might include guided breathing exercises lasting a few minutes, reminders to take a short walk, or prompts for microbreaks. Some devices also provide time-of-day heatmaps, helping users spot patterns - like stress spikes linked to specific activities, poor sleep, or caffeine consumption [4].

While wearables excel at delivering immediate stress alerts, AI-driven health coaching apps take this a step further by offering personalized insights and guidance.

AI Health Coaching Apps Like Healify

Healify enhances what wearables offer by integrating their multimodal data with additional inputs like bloodwork results, lifestyle habits, and mood logs. Using deep learning techniques similar to those employed in multimodal stress analysis, Healify identifies patterns across diverse data streams. For instance, it can link stress trends to specific workdays, glucose fluctuations, or even sleep deprivation that often precedes heightened stress.

What sets Healify apart is its ability to provide tailored recommendations instead of generic advice. It considers factors such as individual schedules, time zones, and workplace norms to offer actionable insights. For example, the app might suggest a personalized evening routine to help unwind or adjust caffeine intake based on stress levels. Healify also delivers timely nudges, like recommending a quick breathing exercise before a stressful meeting, turning complex data into practical, user-friendly guidance.

Despite these advancements, there are still hurdles to overcome and areas ripe for further research.

Current Challenges and Research Opportunities

The journey toward perfecting multimodal stress monitoring is not without its challenges. Individual differences and the variety of wearable devices make personalization a complex task [4]. Additionally, external factors like physical activity, extreme temperatures, or illness can mimic stress signals, leading to potential false positives. To address this, models need to incorporate contextual data, such as physical activity levels, environmental conditions, and occasional user input, to improve accuracy [3][4].

Another issue lies in the datasets used for training these models. Many are based on small, homogenous groups or controlled laboratory settings, which may not reflect the diverse real-world conditions across the U.S. [1][2][3][4]. Researchers are exploring advanced techniques, such as domain adaptation, transfer learning, and on-device continuous learning, to make global models more adaptable to individual users while maintaining data privacy [1][2][4]. Future advancements may involve integrating smartphone data - like location, app usage, and calendar activities - to differentiate between stress caused by work, social interactions, or financial pressures. Additionally, developing richer datasets collected over longer periods and tied to everyday experiences will be key to improving the reliability of these systems [3][4].

Conclusion

The advancements discussed highlight how multimodal approaches are reshaping stress monitoring. By combining signals like heart rate, EDA, skin temperature, accelerometer data, and EEG/ECG, these systems achieve far better stress detection compared to single-sensor methods. Research consistently shows that multimodal systems deliver higher accuracy, often surpassing the typical high 80s to low 90s accuracy range of single-signal approaches. This improvement significantly reduces false alarms, making these systems more reliable for users navigating the unpredictable conditions of daily life with smartwatches or fitness trackers.

Deep learning frameworks, such as MMFD-SD, further showcase the practicality of multimodal systems by handling intermittently collected data. This makes them ideal for everyday wearables, enabling devices to alert users to rising stress levels even before they consciously recognize it. By blending advanced analytics with usability, these systems provide actionable insights that can be seamlessly integrated into daily routines.

On top of the technical advancements, AI-driven health coaching turns this data into meaningful, real-time support. Tools like Healify go beyond presenting raw numbers by offering personalized interventions. For example, wearable data is combined with broader health metrics to deliver tailored recommendations - like a quick breathing exercise before a meeting or a customized evening wind-down routine. These features help users shift from simply tracking stress to actively managing it, fostering better habits and improved sleep.

However, challenges remain in moving from prototypes to fully integrated, real-world solutions. Current datasets are often small and lack diversity, limiting the system’s ability to generalize. Future efforts must focus on creating large, diverse datasets, developing context-aware models that can distinguish stress from physical activity, and ensuring on-device processing is efficient, preserving battery life and user privacy. The path forward is clear: multimodal, AI-enhanced wearables are transforming stress and mental health care into continuous, real-world support. These tools meet people wherever they are - whether at work, at home, or on the go - helping them build healthier and more resilient lifestyles.

FAQs

How do multimodal signals enhance the accuracy of stress monitoring?

Multimodal signals improve stress monitoring by blending data from different physiological markers like heart rate variability, skin conductance, and cortisol levels. This combination creates a fuller picture of the body's stress response, leading to more accurate detection.

When these signals are analyzed together, it helps distinguish true stress reactions from false alarms, providing a clearer insight into both physical and mental health.

What are the main challenges of using multimodal signals for stress monitoring?

Multimodal stress monitoring systems come with their fair share of challenges. One major hurdle is combining data from various sensors while maintaining accuracy and reliability. This becomes even trickier when you factor in individual differences - people’s physiology and stress responses can vary significantly, making it tough to design systems that work effectively for everyone.

Another challenge lies in real-time data processing. These systems need to handle and analyze massive amounts of information quickly to deliver actionable insights without delay. On top of that, protecting user privacy and ensuring strong data security measures is essential, given the sensitive nature of the health information these systems often manage.

How does Healify use multimodal data to provide personalized health advice?

Healify taps into multimodal data - like physiological signals, activity levels, sleep habits, and stress markers - to provide health advice that's tailored to each individual. By analyzing real-time information from wearables and other sources, it detects patterns such as stress or fatigue and suggests practical ways to improve areas like sleep, nutrition, hydration, and overall wellness.

This approach transforms complex health data into actionable steps, helping users enhance both their physical and mental well-being.

Related Blog Posts

Finally take control of your health
